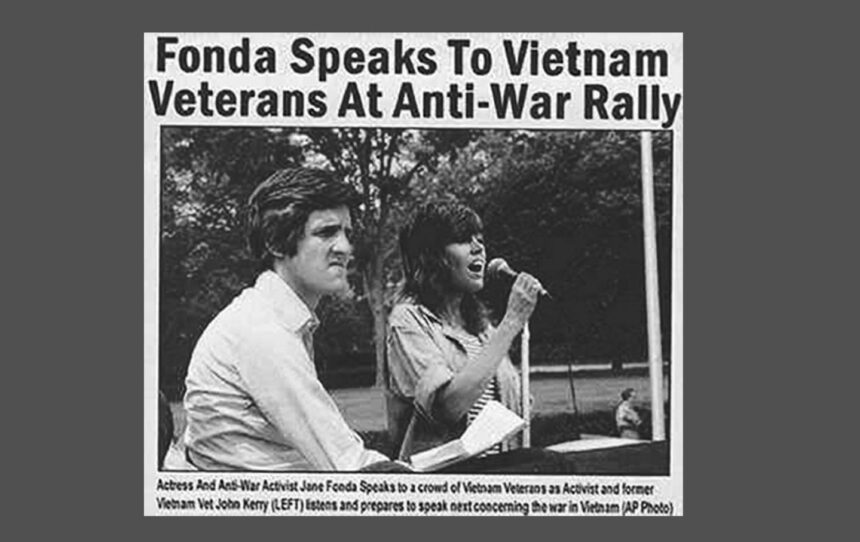

In 2004, a doctored political photo caused outrage and confusion. Two decades later, why hasn’t visual literacy advanced?

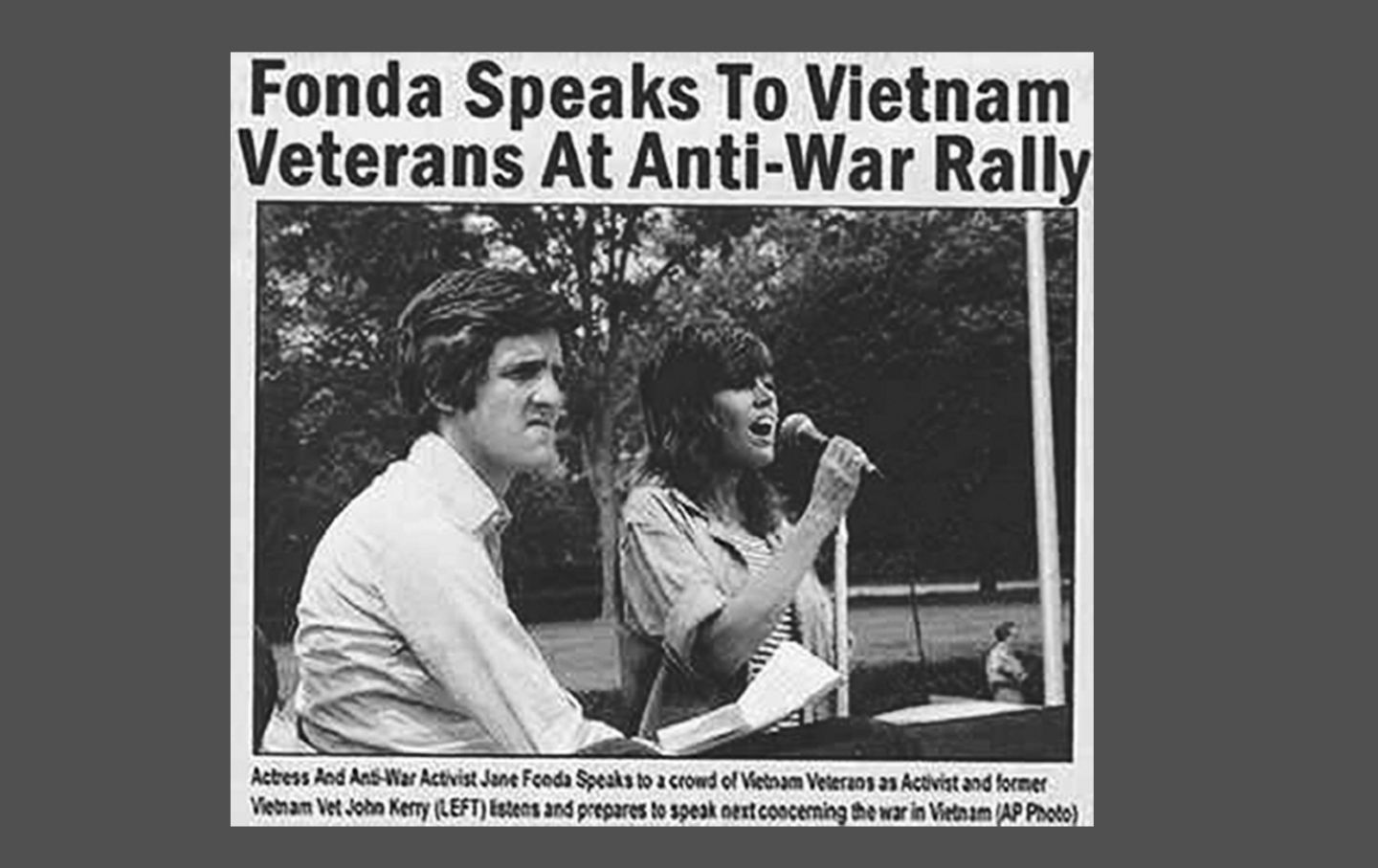

Image created by merging two photos, showing presidential candidate John Kerry and actress Jane Fonda, who campaigned against the Vietnam War in the 1970s.

(Public Domain)

In 2004, a photo of John Kerry and Jane Fonda circulated online, in newspapers, and on cable TV. The two appeared to be sharing an outdoor stage sometime during the late 1960s or early ’70s. Conservative pundits like Rush Limbaugh and Sean Hannity went crazy over the image: It seemed to suggest that the centrist Vietnam vet Kerry had aligned himself with “Hanoi Jane” and the “traitorous” far left. The New York Times published a piece about the picture, outlining conservatives’ search for a connection between Kerry and Fonda, and then a week later, the image and its credit from AP News were debunked as fakes. What was at work here was not evidence of comrades in arms, but rather really good Photoshop.

If I presented this image with that description to a classroom of my college students, they might immediately fall asleep: After all, only the oldest among them are capable of remembering the former Democratic presidential candidate made of ketchup and wood. And by now, they’re also inured to fake images made by AI, which they see all over their Instagram and TikTok feeds. But in 2004, this altered image was news, an election-year flashpoint that seemed to show Kerry’s radical roots as he tried to appeal to the country’s center.

Last year, after the attempted assassination of Donald Trump, images immediately circulated online of the president raising a defiant fist, flanked by Secret Service agents. In slightly different versions of this image, the agents are smiling, prompting speculation on both sides of the political spectrum that the assassination attempt might have been faked to bolster Trump’s platform. Soon after, the smiling image too was deemed a fake. The happy-agents version had been altered by someone on the Internet using AI.

These two image snafus occurred twenty years apart. In that span of time, we’ve seen a technological revolution: the rise of smartphones, social media platforms, self-driving cars, drones, VR headsets, 3-D printers, hoverboards (but not the kind we all really wanted), and more. But what, shockingly, hasn’t seemed to change is our visual literacy. Just as in 2004, the potential voting public in 2024 was still duped by a picture roaming around the Web. How come we didn’t learn our lesson?

Anxiety over what the digital revolution might mean for photography, and how the cultural relevance of the photograph would change, had been mounting for at least twenty years by the time the Kerry/Fonda image first appeared on a conservative website called “vietnamveteransagainstjohnkerry.com.” (But don’t go there looking for evidence… it’s currently a parked hub for what appears to be an Indonesian gambling site.)

The first time we really started talking about the possibility of digitally manipulating photographs was in 1982, when a National Geographic cover showed camel riders in front of the pyramids at Giza and, shortly after publication, it came to light that the magazine had used new digital editing software to move the pyramids closer together, without the photographer’s permission. Former Nat Geo editor in chief Susan Goldberg discussed this incident a generation later in a 2016 piece for the magazine and claimed that “a deserved firestorm ensued” in the ’80s, including from within the publication itself, which caused the magazine to quickly reverse its stance on altering photographs. This unease about the possibility that our own eyes could fool us continued as the millennium approached. There was widespread concern among scientists and government agencies that medical and scientific results could be faked through digital images. In a 1994 issue of Science magazine, the authors of a piece on “easy-to-alter digital images” claimed that “digital image fraud can be accomplished without a trace.” Shocker of shockers, manipulated photos began darkening the hallowed halls of tabloid magazine culture. In 1997, the Daily Mail published photos of Princess Diana weeks before her death, artificially rotating Dodi Al Fayed’s head to make it look like the two were about to kiss, a preview of all the Putin deepfakes and Studio Ghibli deportation memes to come.

By 2003, digital camera sales would outpace those of film cameras for the first time, mostly the kind of point-and-shoot models that you could pick up at a Best Buy or Radio Shack for a few hundred dollars. Around this time, the concerns around digital imagery seemed to shift from existential to more specific, and in general the public furor around the deceptive capabilities of these images subsided. There were so many ethical discussions around the airbrushing of celebrities on magazine covers, but fewer, for some reason, about the entire collapse of photographic truth.

One possible explanation for why this conversation died down in the aughts is that, as more people used digital cameras and messed around with early versions of Photoshop, there was a collective realization that “faking” reality with those tools was still quite difficult, at least to do well, and therefore, fakes wouldn’t run rampant. Gawking at Photoshop fails remains a fun way to kill time: The eerily stretched necks? The missing arms that created a sleeker profile? These images also prove how even professionals, when rushed, could warp the supposed “truth” beyond believability. Still, somehow the gleeful hunt to find these occasional errors in Photoshopping didn’t translate to the media literacy required for the new age of AI.

Of course, the barrier to entry into image manipulation has steadily decreased over the last twenty years. Smartphone cameras made photography more accessible, causing a spike in the number of images made and consumed. Throughout the 2010s, when people started using Instagram and Snapchat, simple AI filters were introduced that made minor adjustments like blurring backgrounds, removing figures, or adding animal ears to a human head a cinch. Since then, software like Midjourney, which allows for the creation of photographic-looking images never captured by a camera, has made headlines, but use of AI has been woven into the programs we use for much longer.

In an ideal world, as children began spending more time online consuming images, introductory arts education would have allowed for lively classroom discussions of what it means for a photograph to “tell the truth” as kids’ worlds became increasingly image-saturated.

Of course, the 2000s didn’t see an explosion of funding for humanities education, with the No Child Left Behind Act prioritizing subjects that could be covered on a standardized test. Even as kids were expected to live in an increasingly image-saturated world, classes where images were discussed critically were treated as extra. Even for students who went on to four-year colleges, general education requirements often only allowed for one or two arts classes if students wanted to graduate in four years.

Making sure most young people received a crash course in media literacy didn’t have to mean more photography majors (although, as a photo professor, I don’t hate that idea). But another good solution would’ve been to acknowledge visual literacy issues across curricula: in civics classes, in arts classes, hell, even in homeroom. There was such an unaddressed need, in general, to deal with the fact that students are bombarded by visual information and could benefit from learning to analyze it more critically.

Educating kids should have been the easy part. A huge gap in visual literacy exists among those who were full-blown adults during the digital revolution, and who are now among the least prepared to analyze AI images. I recently scrolled past a group of images on my social media feed, shared by a friend in her 70s, showing Bruce Springsteen and Bob Dylan standing side by side. Three images appeared to be photographs taken during concerts, but in a fourth image, Springsteen is pressing a typically stoic old Bob’s weeping face to his breast. The post’s obviously AI-generated caption wove a narrative of what the viewer was supposedly witnessing: “Later, backstage, Dylan looked at him and said, ‘If there’s ever anything I can do for you…’ Springsteen, nearly speechless, replied, ‘You already did.’”


While this text reads like the kind of sparkly fan fiction that would ignite most people’s intrinsic suspicions, there was debate in the comments section over the image’s veracity, or whether the truth here even mattered. “I totally buy Dylan crying and Bruce comforting him,” one commenter wrote. “And even if it’s AI, so what? It’s a good message.”

It’s now possible to type a short sentence, note that you’d like the image to look “photographic,” and create a scene that never happened. Sometimes, the images show errors that hint at their AI origins, but more and more, the images read as perfect, stock-photo quality renderings of the prompt. The one giveaway is that AI images are often too perfect, too on-the-nose. Over the past few years, many Pinterest users have complained that, instead of the real-world inspiration that drew them to the platform, they’re now bombarded by fictional slop of gardens, clothes, and other “handmade” items created entirely by AI. An unfathomable number of photographic images were already being created every day, and now, new image-generation technology has multiplied that number beyond comprehension.

One important lesson that we all should have learned over the last generation of rapid image production, manipulation, and consumption is that photographs, and images in general, never exactly show “the truth.” As Susan Sontag wrote in her essay “In Plato’s Cave,” “Although there is a sense in which the camera does indeed capture reality, not just interpret it, photographs are as much an interpretation of the world as paintings and drawings are.”

Even the most straight documentary photograph should be viewed with the knowledge that, just out of frame, something lingers that could change the entire narrative. Today, we’re forced to deal with AI models that were trained on a bank of images, most of them photographs, and a new constellation of pictures swallowed and then reconstituted into new forms that we should have been more prepared to interpret.

The question raised by the Bruce-caressing-Bob supporter’s comment is a question worth asking. Does it matter if the image was made by AI? And what should we bring to the table as viewers who must confront thousands of images every day? While the answers are important, it’s more crucial that we all reckon with the questions, and that includes the kids that we’re throwing into this confusing pictorial ocean. The goal shouldn’t be to avoid ever being “duped.” Anyone who consistently aces those “AI or photo” quizzes should be very proud of themselves, but there also is no such thing as an infallible viewer. What’s most important is that we’re always questioning images, thinking deeply about them, and understanding how what they communicate could be transformative. The most dangerous thing a person can be is a casual, uncritical viewer.

