BBC Verify

A wave of disinformation has been unleashed online since Israel began strikes on Iran last week, with dozens of posts reviewed by BBC Verify seeking to amplify the effectiveness of Tehran’s response.

Our analysis found a number of videos – created using artificial intelligence – boasting of Iran’s military capabilities, alongside fake clips showing the aftermath of strikes on Israeli targets. The three most viewed fake videos BBC Verify found have collectively amassed over 100 million views across multiple platforms.

Pro-Israeli accounts have also shared disinformation online, mainly by recirculating old clips of protests and gatherings in Iran, falsely claiming that they show mounting dissent against the government and support among Iranians for Israel’s military campaign.

Israel launched strikes in Iran on 13 June, leading to several rounds of Iranian missile and drone attacks on Israel.

One organisation that analyses open-source imagery described the volume of disinformation online as “astonishing” and accused some “engagement farmers” of seeking to profit from the conflict by sharing misleading content designed to attract attention online.

“We are seeing everything from unrelated footage from Pakistan, to recycled videos from the October 2024 strikes – some of which have amassed over 20 million views – as well as game clips and AI-generated content being passed off as real events,” Geoconfirmed, the online verification group, wrote on X.

Certain accounts have become “super-spreaders” of disinformation, being rewarded with significant growth in their follower count. One pro-Iranian account with no obvious ties to authorities in Tehran – Daily Iran Military – has seen its followers on X grow from just over 700,000 on 13 June to 1.4m by 19 June, an 85% increase in under a week.

It is one of many obscure accounts that have appeared in people’s feeds recently. All have blue ticks, are prolific in messaging and have repeatedly posted disinformation. Because some use seemingly official names, some people have assumed they are authentic accounts, but it is unclear who is actually running the profiles.

The torrent of disinformation marked “the first time we’ve seen generative AI be used at scale during a conflict,” Emmanuelle Saliba, Chief Investigative Officer with the analyst group Get Real, told BBC Verify.

Accounts reviewed by BBC Verify frequently shared AI-generated images that appear to be seeking to exaggerate the success of Iran’s response to Israel’s strikes. One image, which has 27m views, depicted dozens of missiles falling on the city of Tel Aviv.

Another video purported to show a missile strike on a building in the Israeli city late at night. Ms Saliba said the clips often depict night-time attacks, making them especially difficult to verify.

AI fakes have also focussed on claims of destruction of Israeli F-35 fighter jets, a state-of-the-art US-made plane capable of striking ground and air targets. If the barrage of clips were real, Iran would have destroyed 15% of Israel’s fleet of the fighters, Lisa Kaplan, CEO of the Alethea analyst group, told BBC Verify. We have yet to authenticate any footage of F-35s being shot down.

One widely shared post claimed to show a jet damaged after being shot down in the Iranian desert. However, signs of AI manipulation were evident: civilians around the jet were the same size as nearby vehicles, and the sand showed no signs of impact.

Another video with 21.1 million views on TikTok claimed to show an Israeli F-35 being shot down by air defences, but the footage actually came from a flight simulator video game. TikTok removed the footage after being approached by BBC Verify.

Ms Kaplan said that some of the focus on F-35s was being driven by a network of accounts that Alethea has previously linked to Russian influence operations.

She noted that Russian influence operations have recently shifted course from trying to undermine support for the war in Ukraine to sowing doubts about the capability of Western – especially American – weaponry.

“Russia doesn’t really have a response to the F-35. So what can it do? It can seek to undermine support for it within certain countries,” Ms Kaplan said.

Disinformation is also being spread by well-known accounts that have previously weighed in on the Israel-Gaza war and other conflicts.

Their motivations vary, but experts said some may be attempting to monetise the conflict, with some major social media platforms offering pay-outs to accounts achieving large numbers of views.

By contrast, pro-Israeli posts have largely focussed on suggestions that the Iranian government is facing mounting dissent as the strikes continue.

Among them is a widely shared AI-generated video falsely purporting to show Iranians chanting “we love Israel” on the streets of Tehran.

However, in recent days – and as speculation about US strikes on Iranian nuclear sites grows – some accounts have started to post AI-generated images of B-2 bombers over Tehran. The B-2 has attracted close attention since Israel’s strikes on Iran started, because it is the only aircraft capable of effectively carrying out an attack on Iran’s subterranean nuclear sites.

Official sources in Iran and Israel have shared some of the fake images. State media in Tehran has shared fake footage of strikes and an AI-generated image of a downed F-35 jet, while a post shared by the Israel Defense Forces (IDF) received a community note on X for using old, unrelated footage of missile barrages.

A lot of the disinformation reviewed by BBC Verify has been shared on X, with users frequently turning to the platform’s AI chatbot – Grok – to establish posts’ veracity.

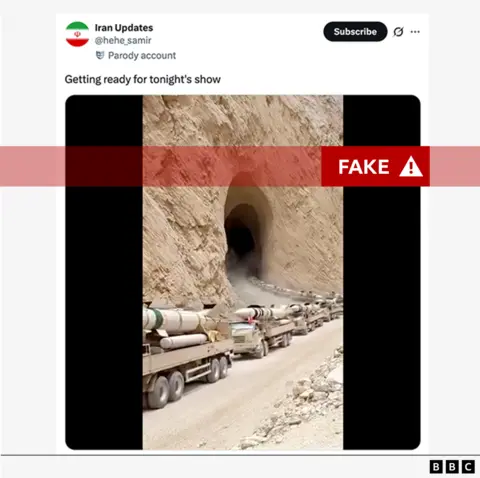

However, in some cases Grok insisted that the AI videos were real. One such video showed an endless stream of trucks carrying ballistic missiles emerging from a mountainside complex. Tell-tale signs of AI content included rocks in the video moving of their own accord, Ms Saliba said.

But in response to X users, Grok insisted repeatedly that the video was real and cited reports by media outlets including Newsweek and Reuters. “Check trusted news for clarity,” the chatbot concluded in several messages.

X did not respond to a request from BBC Verify for comment on the chatbot’s actions.

Many videos have also appeared on TikTok and Instagram. In a statement to BBC Verify, TikTok said it proactively enforces community guidelines “which prohibit inaccurate, misleading, or false content” and that it works with independent fact checkers to “verify misleading content”.

Instagram owner Meta did not respond to a request for comment.

While the motivations of those creating online fakes vary, many are shared by ordinary social media users.

Matthew Facciani, a researcher at the University of Notre Dame, suggested that disinformation can spread more quickly online when people are faced with binary choices, such as those raised by conflict and politics.

“That speaks to the broader social and psychological issue of people wanting to re-share things if it aligns with their political identity, and also just in general, more sensationalist emotional content will spread more quickly online.”