At the center of every empire is an ideology, a belief system that propels the system forward and justifies expansion, even if the cost of that expansion directly defies the ideology’s stated mission.

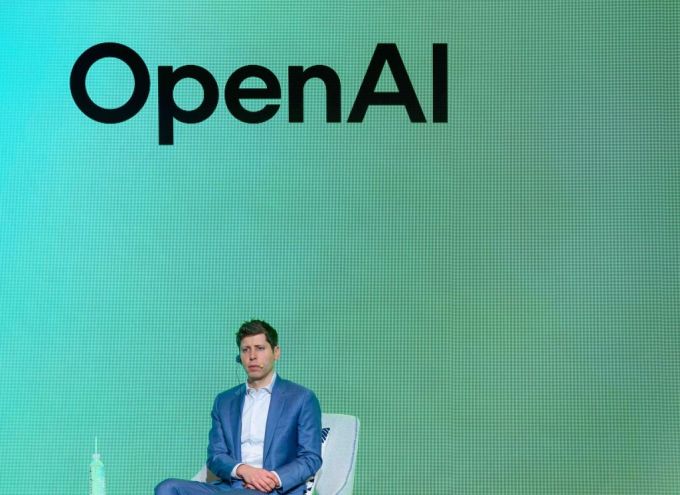

For European colonial powers, it was Christianity and the promise of saving souls while extracting resources. For today’s AI empire, it’s artificial general intelligence to “benefit all humanity.” And OpenAI is its chief evangelist, spreading zeal across the industry in a way that has reframed how AI is built.

“I was interviewing people whose voices were shaking from the fervor of their beliefs in AGI,” Karen Hao, journalist and bestselling author of “Empire of AI,” told TechCrunch on a recent episode of Equity.

In her book, Hao likens the AI industry in general, and OpenAI in particular, to an empire.

“The only way to really understand the scope and scale of OpenAI’s behavior…is actually to recognize that they’ve already grown more powerful than pretty much any nation state in the world, and they’ve consolidated an extraordinary amount of not just economic power, but also political power,” Hao said. “They’re terraforming the Earth. They’re rewiring our geopolitics, all of our lives. And so you can only describe it as an empire.”

OpenAI has described AGI as “a highly autonomous system that outperforms humans at most economically valuable work,” one that will somehow “elevate humanity by increasing abundance, turbocharging the economy, and aiding in the discovery of new scientific knowledge that changes the limits of possibility.”

These nebulous promises have fueled the industry’s exponential growth, with its massive resource demands, oceans of scraped data, strained energy grids, and willingness to release untested systems into the world. All in service of a future that many experts say may never arrive.

Hao says this path wasn’t inevitable, and that scaling isn’t the only way to get more advances in AI.

“You can also develop new techniques in algorithms,” she said. “You can improve the existing algorithms to reduce the amount of data and compute that they need to use.”

But that tactic would have meant sacrificing speed.

“When you define the quest to build beneficial AGI as one where the victor takes all, which is what OpenAI did, then the most important thing is speed over anything else,” Hao said. “Speed over efficiency, speed over safety, speed over exploratory research.”

For OpenAI, she said, the best way to guarantee speed was to take existing techniques and “just do the intellectually cheap thing, which is to pump more data, more supercomputers, into those existing techniques.”

OpenAI set the stage, and rather than fall behind, other tech companies decided to fall in line.

“And because the AI industry has successfully captured most of the top AI researchers in the world, and those researchers no longer exist in academia, then you have an entire discipline now being shaped by the agenda of these companies, rather than by real scientific exploration,” Hao said.

The spend has been, and will be, astronomical. Last week, OpenAI said it expects to burn through $115 billion in cash by 2029. Meta said in July that it would spend up to $72 billion on building AI infrastructure this year. Google expects to hit up to $85 billion in capital expenditures for 2025, most of which will be spent on expanding AI and cloud infrastructure.

Meanwhile, the goalposts keep moving, and the loftiest “benefits to humanity” haven’t yet materialized, even as the harms mount. Harms like job loss, concentration of wealth, and AI chatbots that fuel delusions and psychosis. In her book, Hao also documents workers in developing countries like Kenya and Venezuela who were exposed to disturbing content, including child sexual abuse material, and were paid very low wages (around $1 to $2 an hour) in roles like content moderation and data labeling.

Hao said it’s a false tradeoff to pit AI progress against present harms, especially when other forms of AI offer real benefits.

She pointed to Google DeepMind’s Nobel Prize-winning AlphaFold, which is trained on amino acid sequence data and complex protein folding structures, and can now accurately predict the 3D structure of proteins from their amino acids, which is profoundly useful for drug discovery and understanding disease.

“Those are the types of AI systems that we need,” Hao said. “AlphaFold does not create mental health crises in people. AlphaFold does not lead to colossal environmental harms … because it’s trained on substantially less infrastructure. It does not create content moderation harms because [the datasets don’t have] all of the toxic crap that you hoovered up when you were scraping the internet.”

Alongside the quasi-religious commitment to AGI has been a narrative about the importance of racing to beat China in the AI race, so that Silicon Valley can have a liberalizing effect on the world.

“Actually, the opposite has happened,” Hao said. “The gap has continued to close between the U.S. and China, and Silicon Valley has had an illiberalizing effect on the world … and the only actor that has come out of it unscathed, you could argue, is Silicon Valley itself.”

Of course, many will argue that OpenAI and other AI companies have benefited humanity by releasing ChatGPT and other large language models, which promise huge gains in productivity by automating tasks like coding, writing, research, customer support, and other knowledge work.

But the way OpenAI is structured, part non-profit and part for-profit, complicates how it defines and measures its impact on humanity. And that’s further complicated by the news this week that OpenAI reached an agreement with Microsoft that brings it closer to eventually going public.

Two former OpenAI safety researchers told TechCrunch that they fear the AI lab has begun to confuse its for-profit and non-profit missions: because people enjoy using ChatGPT and other products built on LLMs, the thinking goes, this ticks the box of benefiting humanity.

Hao echoed these concerns, describing the dangers of being so consumed by the mission that reality is ignored.

“Even as the evidence accumulates that what they’re building is actually harming significant amounts of people, the mission continues to paper all of that over,” Hao said. “There’s something really dangerous and dark about that, of [being] so wrapped up in a belief system you constructed that you lose touch with reality.”