The subtitle of the doom bible to be published by AI extinction prophets Eliezer Yudkowsky and Nate Soares later this month is “Why superhuman AI would kill us all.” But it really should be “Why superhuman AI WILL kill us all,” because even the coauthors don’t believe that the world will take the necessary measures to stop AI from eliminating all non-super humans. The book is beyond dark, reading like notes scrawled in a dimly lit prison cell the night before a dawn execution. When I meet these self-appointed Cassandras, I ask them outright if they believe that they personally will meet their ends through some machination of superintelligence. The answers come promptly: “yeah” and “yup.”

I’m not surprised, because I’ve read the book (the title, by the way, is If Anyone Builds It, Everyone Dies). Still, it’s a jolt to hear this. It’s one thing to, say, write about cancer statistics and quite another to talk about coming to terms with a fatal diagnosis. I ask them how they think the end will come for them. Yudkowsky at first dodges the answer. “I don't spend a lot of time picturing my demise, because it doesn't seem like a helpful mental notion for dealing with the problem,” he says. Under pressure he relents. “I would guess suddenly falling over dead,” he says. “If you want a more accessible version, something about the size of a mosquito or maybe a dust mite landed on the back of my neck, and that’s that.”

The technicalities of his imagined fatal blow delivered by an AI-powered dust mite are inexplicable, and Yudkowsky doesn’t think it’s worth the trouble to figure out how that would work. He probably couldn’t understand it anyway. Part of the book’s central argument is that superintelligence will come up with scientific stuff that we can’t comprehend any more than cave people could imagine microprocessors. Coauthor Soares also says he imagines the same thing will happen to him but adds that he, like Yudkowsky, doesn't spend a lot of time dwelling on the particulars of his demise.

We Don’t Stand a Chance

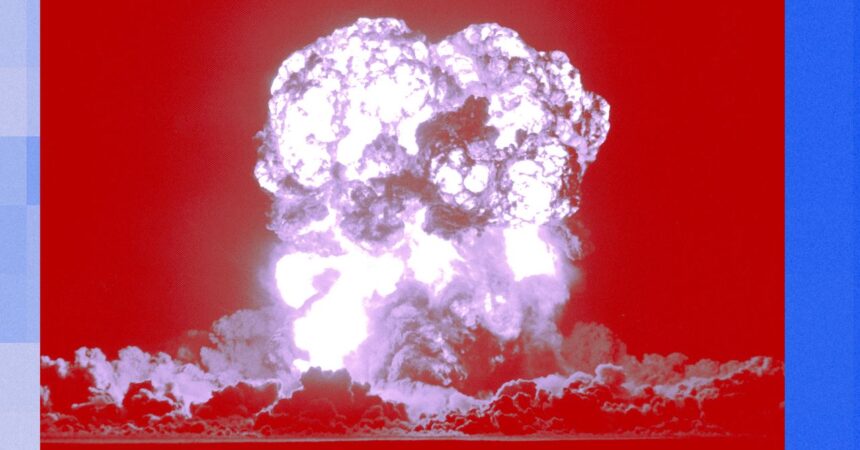

Reluctance to visualize the circumstances of their personal demise is an odd thing to hear from people who have just coauthored an entire book about everyone’s demise. For doomer-porn aficionados, If Anyone Builds It is appointment reading. After zipping through the book, I do understand the fuzziness of nailing down the method by which AI ends our lives and all human lives thereafter. The authors do speculate a bit. Boiling the oceans? Blocking out the sun? All guesses are probably wrong, because we’re locked into a 2025 mindset, and the AI will be thinking eons ahead.

Yudkowsky is AI’s most famous apostate, switching from researcher to grim reaper years ago. He’s even done a TED talk. After years of public debate, he and his coauthor have an answer for every counterargument launched against their dire prognostication. For starters, it might seem counterintuitive that our days are numbered by LLMs, which often stumble on simple arithmetic. Don’t be fooled, the authors say. “AIs won’t stay dumb forever,” they write. If you think that superintelligent AIs will respect boundaries humans draw, forget it, they say. Once models start teaching themselves to get smarter, AIs will develop “preferences” on their own that won’t align with what we humans want them to prefer. Eventually they won’t need us. They won’t be interested in us as conversation partners or even as pets. We’d be a nuisance, and they would set out to eliminate us.

The fight won’t be a fair one. They believe that at first AI might require human aid to build its own factories and labs, which is easily done by stealing money and bribing people to help it out. Then it will build stuff we can’t understand, and that stuff will end us. “One way or another,” write these authors, “the world fades to black.”

The authors see the book as kind of a shock treatment to jar humanity out of its complacence and get it to adopt the drastic measures needed to stop this unimaginably bad conclusion. “I expect to die from this,” says Soares. “But the fight’s not over until you're actually dead.” Too bad, then, that the solutions they propose to stop the devastation seem even more far-fetched than the idea that software will murder us all. It all boils down to this: Hit the brakes. Monitor data centers to make sure that they’re not nurturing superintelligence. Bomb those that aren't following the rules. Stop publishing papers with ideas that accelerate the march to superintelligence. Would they have banned, I ask them, the 2017 paper on transformers that kicked off the generative AI movement? Oh yes, they would have, they respond. Instead of Chat-GPT, they want Ciao-GPT. Good luck stopping this trillion-dollar industry.

Playing the Odds

Personally, I don’t see my own light snuffed by a bite in the neck by some super-advanced dust mote. Even after reading this book, I don’t think it’s likely that AI will kill us all. Yudkowsky has previously dabbled in Harry Potter fan-fiction, and the fanciful extinction scenarios he spins are too weird for my puny human brain to accept. My guess is that even if superintelligence does want to get rid of us, it will stumble in enacting its genocidal plans. AI might be capable of whipping humans in a fight, but I’ll bet against it in a battle with Murphy’s law.

Still, the catastrophe theory doesn’t seem impossible, especially since no one has really set a ceiling for how smart AI can become. Also, studies show that advanced AI has picked up a lot of humanity’s nasty attributes, even contemplating blackmail to stave off retraining in one experiment. It’s also disturbing that some researchers who spend their lives building and improving AI think there’s a nontrivial chance that the worst can happen. One survey indicated that almost half the AI scientists responding pegged the odds of a species wipeout at 10 percent or higher. If they believe that, it’s crazy that they go to work every day to make AGI happen.

My gut tells me the scenarios Yudkowsky and Soares spin are too bizarre to be true. But I can’t be sure they are wrong. Every author dreams of their book being an enduring classic. Not so much these two. If they are right, there will be no one around to read their book in the future. Just a lot of decomposing bodies that once felt a slight nip at the back of their necks, and the rest was silence.