On November 2, 2022, I attended a Google AI event in New York City. One of the themes was responsible AI. As I listened to executives talk about how they aligned their technology with human values, I realized that the malleability of AI models was a double-edged sword. Models could be tweaked to, say, minimize biases, but also to enforce a specific point of view. Governments could demand manipulation to censor unwelcome facts and promote propaganda. I envisioned this as something that an authoritarian regime like China might employ. In the United States, of course, the Constitution would prevent the government from messing with the outputs of AI models created by private companies.

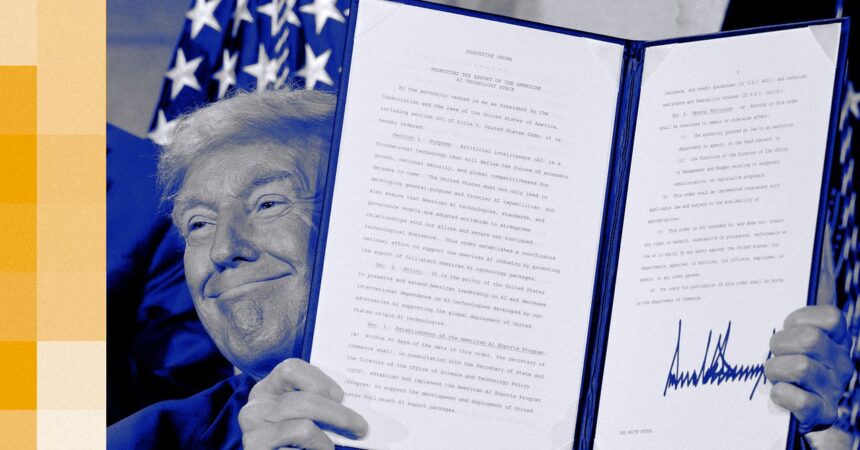

This Wednesday, the Trump administration released its AI manifesto, a far-ranging action plan for one of the most important issues facing the country, or even humanity. The plan generally focuses on besting China in the race for AI supremacy. But one part of it seems more in sync with China’s playbook. In the name of truth, the US government now wants AI models to adhere to Donald Trump’s definition of that word.

You won’t find that intent plainly stated in the 28-page plan. Instead it says, “It is essential that these systems be built from the ground up with freedom of speech and expression in mind, and that U.S. government policy does not interfere with that objective. We must ensure that free speech flourishes in the era of AI and that AI procured by the Federal government objectively reflects truth rather than social engineering agendas.”

That’s all fine until the last sentence, which raises the question: truth according to whom? And what exactly is a “social engineering agenda”? We get a clue in the very next paragraph, which instructs the Department of Commerce to look at the Biden AI rules and “eliminate references to misinformation, Diversity, Equity, and Inclusion, and climate change.” (Weird uppercase as written in the published plan.) Acknowledging climate change is social engineering? As for truth, in a fact sheet about the plan, the White House says, “LLMs shall be truthful and prioritize historical accuracy, scientific inquiry, and objectivity.” Sounds good, but this comes from an administration that limits American history to “uplifting” interpretations, denies climate change, and regards Donald Trump’s claims about being America’s greatest president as objective truth. Meanwhile, just this week, Trump’s Truth Social account reposted an AI video of Obama in prison.

In a speech touting the plan in Washington on Wednesday, Trump explained the logic behind the directive: “The American people don’t want woke Marxist lunacy in the AI models,” he said. Then he signed an executive order entitled “Preventing Woke AI in the Federal Government.” While specifying that the “Federal Government should be hesitant to regulate the functionality of AI models in the private marketplace,” it declares that “in the context of Federal procurement, it has the obligation not to procure models that sacrifice truthfulness and accuracy to ideological agendas.” Because all the big AI companies are courting government contracts, the order appears to be a backdoor effort to ensure that LLMs in general show fealty to the White House’s interpretation of history, sexual identity, and other hot-button issues. In case there’s any doubt about what the government regards as a violation, the order spends several paragraphs demonizing AI that supports diversity, calls out racial bias, or values gender equality. Pogo alert: Trump’s executive order banning top-down ideological bias is a blatant exercise in top-down ideological bias.

Marx Madness

It’s up to the companies to decide how to deal with these demands. I spoke this week to an OpenAI engineer working on model behavior who told me that the company already strives for neutrality. In a technical sense, they said, meeting government standards like being anti-woke shouldn’t be a huge hurdle. But this isn’t a technical dispute: It’s a constitutional one. If companies like Anthropic, OpenAI, or Google decide to try minimizing racial bias in their LLMs, or make a conscious choice to ensure the models’ responses reflect the dangers of climate change, the First Amendment presumably protects those decisions as exercises of the “freedom of speech and expression” touted in the AI Action Plan. A government mandate denying government contracts to companies exercising that right is the essence of interference.

You might think that the companies building AI would fight back, citing their constitutional rights on this issue. But so far no Big Tech company has publicly objected to the Trump administration’s plan. Google celebrated the White House’s support of its pet issues, like boosting infrastructure. Anthropic published a positive blog post about the plan, though it complained about the White House’s sudden apparent abandonment of strong export controls earlier this month. OpenAI says it is already close to achieving objectivity. Nothing about asserting their own freedom of expression.

In on the Action

The reticence is understandable because, overall, the AI Action Plan is a bonanza for AI companies. While the Biden administration mandated scrutiny of Big Tech, Trump’s plan is a big fat green light for the industry, which it regards as a partner in the national battle to beat China. It allows the AI powers to essentially blow past environmental objections when constructing massive data centers. It pledges support for AI research that will flow to the private sector. There’s even a provision that limits some federal funds for states that try to regulate AI on their own. That’s a consolation prize for a failed portion of the recent budget bill that would have banned state regulation for a decade.

For the rest of us, though, the “anti-woke” order isn’t so easily dismissed. AI is increasingly the medium through which we get our news and information. A founding principle of the United States has been the independence of such channels from government interference. We’ve seen how the current administration has cowed the parent companies of media giants like CBS into apparently compromising their journalistic principles to favor corporate goals. If this “anti-woke” agenda is extended to AI models, it’s not unreasonable to expect similar accommodations. Senator Edward Markey has written directly to the CEOs of Alphabet, Anthropic, OpenAI, Microsoft, and Meta urging them to fight the order. “The details and implementation plan for this executive order remain unclear,” he writes, “but it will create significant financial incentives for the Big Tech companies … to ensure their AI chatbots don’t produce speech that would upset the Trump administration.” In a statement to me, he said, “Republicans want to use the power of the government to make ChatGPT sound like Fox & Friends.”

As you might suspect, this view isn’t shared by the White House team working on the AI plan. They believe their goal is true neutrality, and that taxpayers shouldn’t have to pay for AI models that don’t reflect impartial truth. Indeed, the plan itself points a finger at China as an example of what happens when truth is manipulated. It instructs the government to examine frontier models from the People’s Republic of China to determine “alignment with Chinese Communist Party talking points and censorship.” Unless the corporate overlords of AI grow some backbone, a future evaluation of American frontier models might well reveal lockstep alignment with White House talking points and censorship. But you might not find that out by querying an AI model. Too woke.

This is an edition of Steven Levy’s Backchannel newsletter.